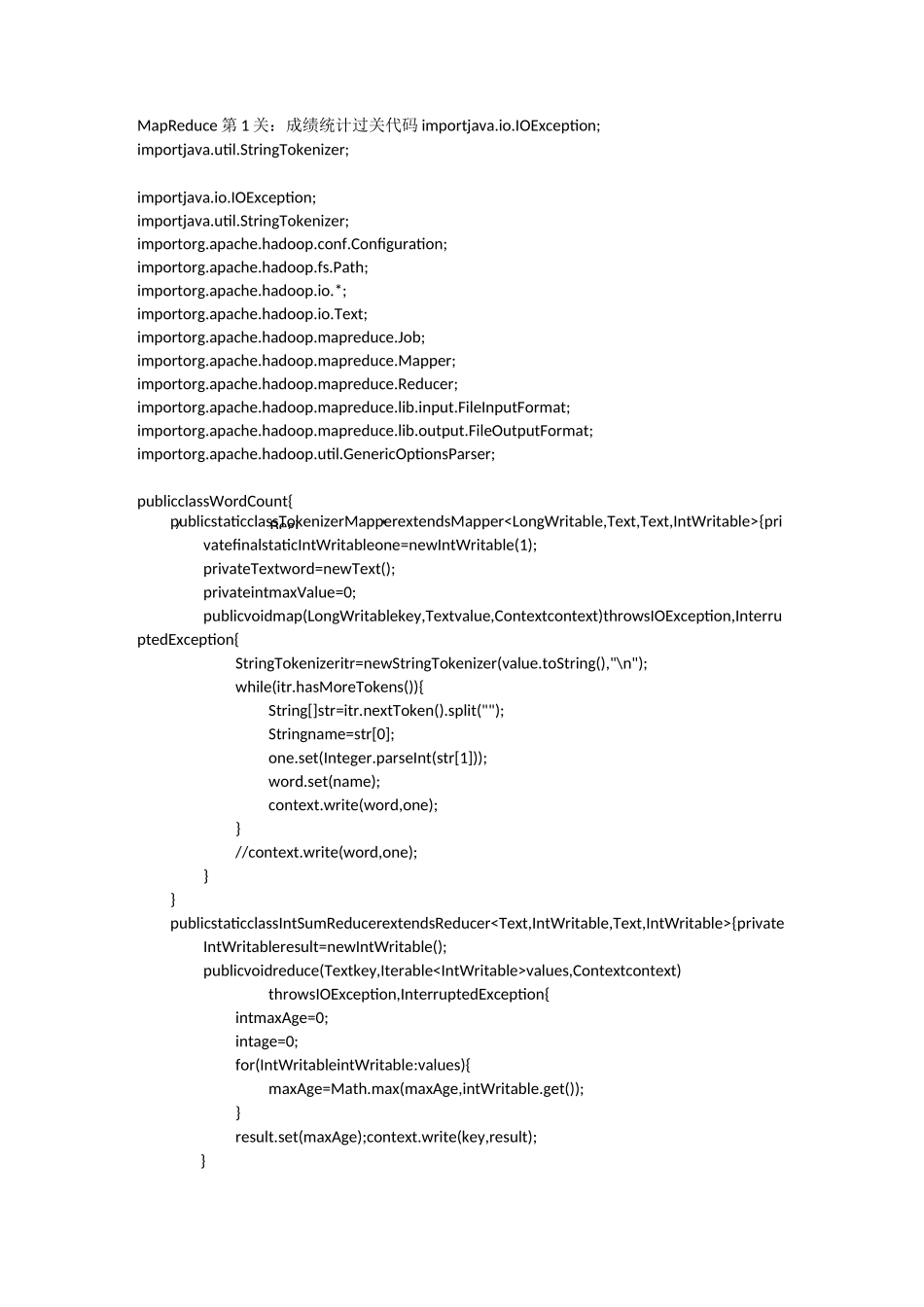

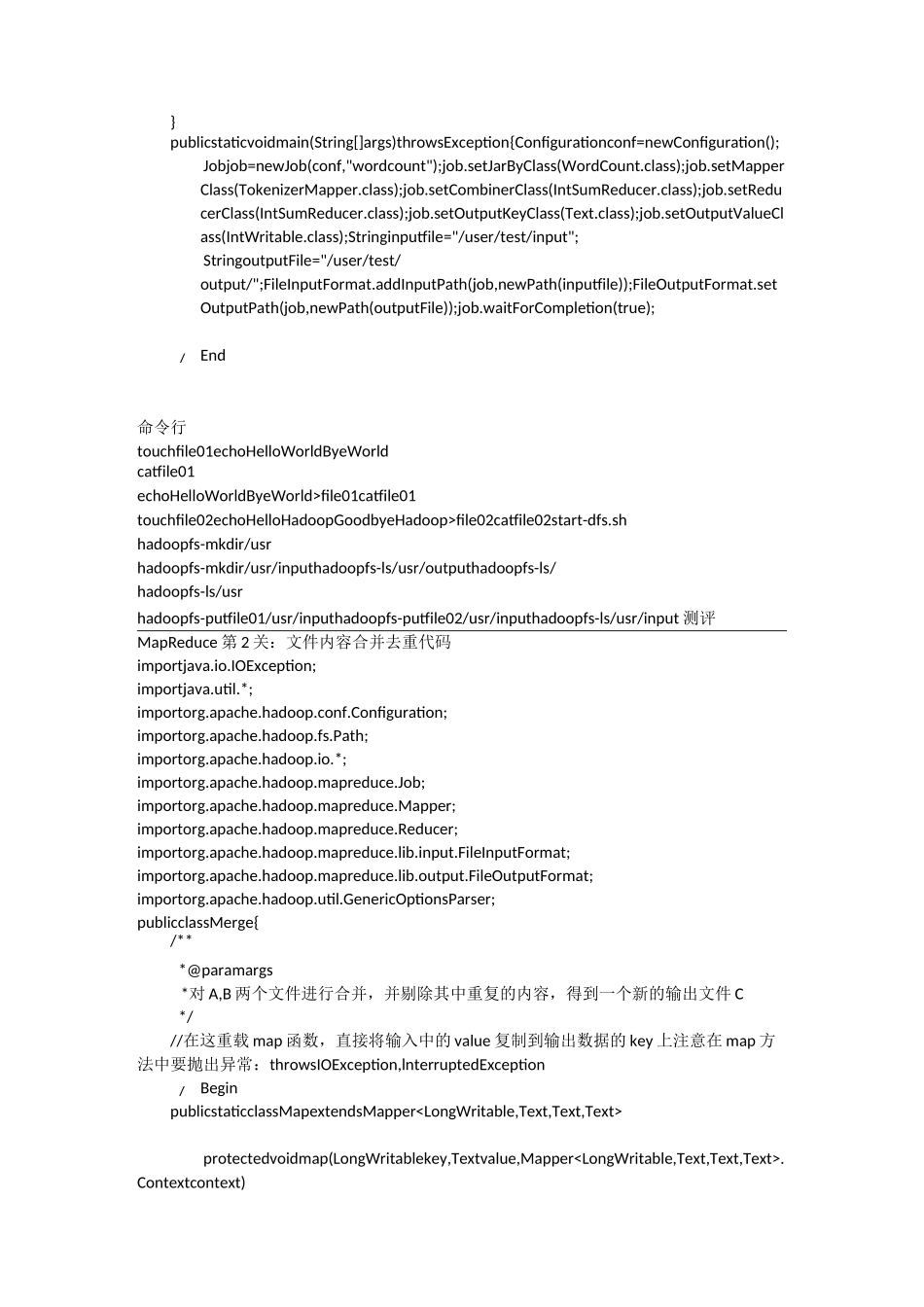

MapReduce Level 1: Score Statistics (passing code)

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.*;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class WordCount {

        // Emits one (name, score) pair per input line of the form "name score".
        public static class TokenizerMapper
                extends Mapper<LongWritable, Text, Text, IntWritable> {

            private final static IntWritable one = new IntWritable(1);
            private Text word = new Text();
            private int maxValue = 0;

            public void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                // Split the input value into lines, then each line into fields.
                StringTokenizer itr = new StringTokenizer(value.toString(), "\n");
                while (itr.hasMoreTokens()) {
                    String[] str = itr.nextToken().split(" ");
                    String name = str[0];
                    one.set(Integer.parseInt(str[1]));
                    word.set(name);
                    context.write(word, one);
                }
                // context.write(word, one);
            }
        }

        // Keeps the highest score seen for each name.
        public static class IntSumReducer
                extends Reducer<Text, IntWritable, Text, IntWritable> {

            private IntWritable result = new IntWritable();

            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int maxAge = 0;
                for (IntWritable intWritable : values) {
                    maxAge = Math.max(maxAge, intWritable.get());
                }
                result.set(maxAge);
                context.write(key, result);
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf, "wordcount");
            job.setJarByClass(WordCount.class);
            job.setMapperClass(TokenizerMapper.class);
            // Taking a maximum is associative, so the reducer can double as the combiner.
            job.setCombinerClass(IntSumReducer.class);
            job.setReducerClass(IntSumReducer.class);
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);
            String inputfile = "/user/test/input";
            String outputFile = "/user/test/output/";
            FileInputFormat.addInputPath(job, new Path(inputfile));
            FileOu...
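
The map and reduce logic in the listing above can be tried out without a Hadoop cluster. The following is a minimal sketch in plain Java, assuming each input line has the form `name score` separated by a single space. The class name `MaxScoreSketch`, the method `maxScores`, and the sample data are hypothetical and are not part of the exercise.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

class MaxScoreSketch {

    // Mirrors the map step (split each line into name and score) and the
    // reduce step (keep the largest score seen for each name).
    static Map<String, Integer> maxScores(List<String> lines) {
        // TreeMap keeps the names in sorted order.
        Map<String, Integer> best = new TreeMap<>();
        for (String line : lines) {
            String[] parts = line.trim().split(" ");
            if (parts.length < 2) {
                continue; // skip blank or malformed lines
            }
            String name = parts[0];
            int score = Integer.parseInt(parts[1]);
            // Keep whichever is larger: the stored score or the new one.
            best.merge(name, score, Integer::max);
        }
        return best;
    }

    public static void main(String[] args) {
        // Hypothetical sample data, for illustration only.
        List<String> sample = Arrays.asList("alice 80", "bob 70", "alice 95");
        maxScores(sample).forEach(
                (name, score) -> System.out.println(name + "\t" + score));
    }
}
```

Unlike the Hadoop mapper, this sketch skips lines with fewer than two fields instead of throwing, which may be a convenient guard to carry over if the real input contains blank lines.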