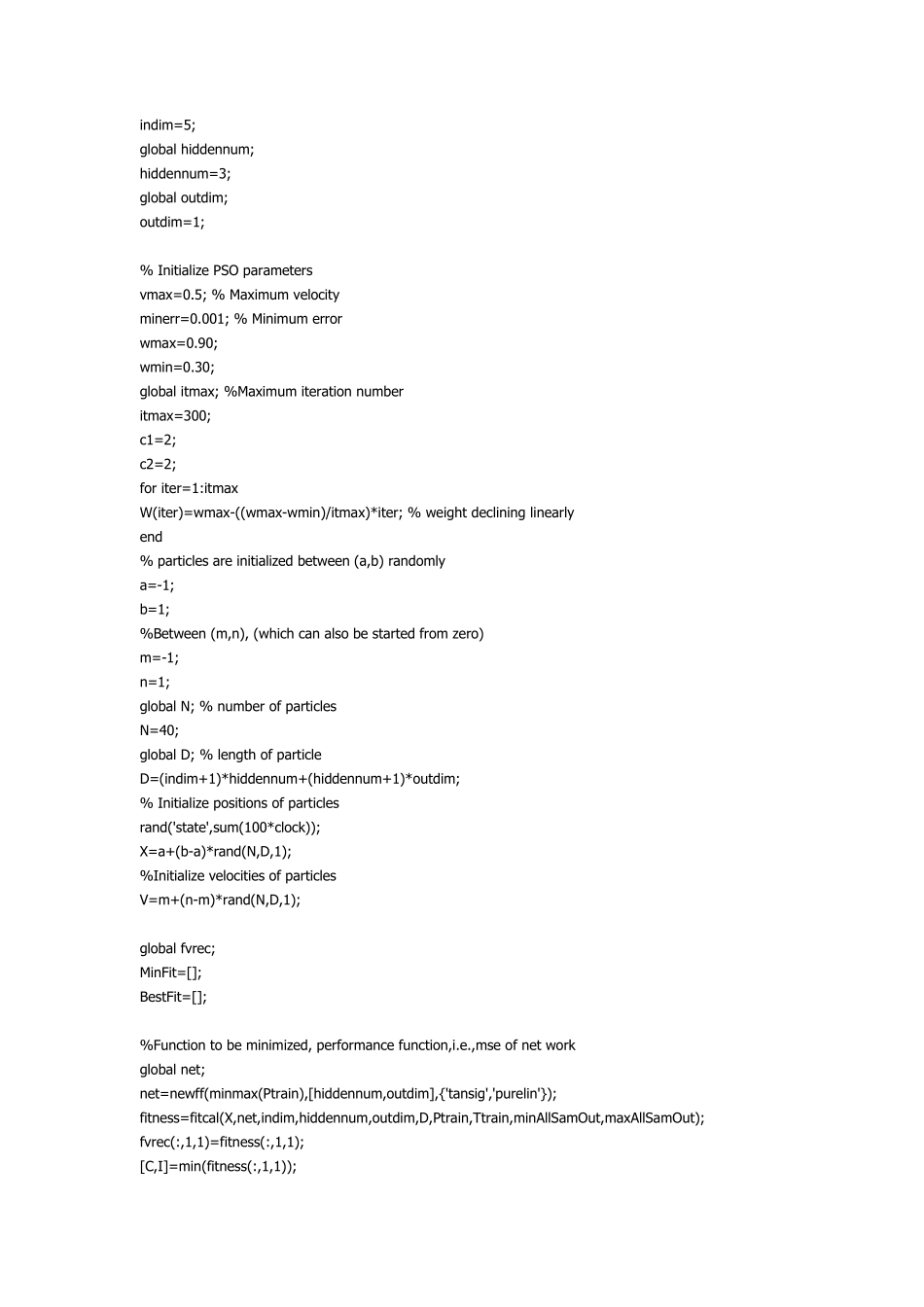

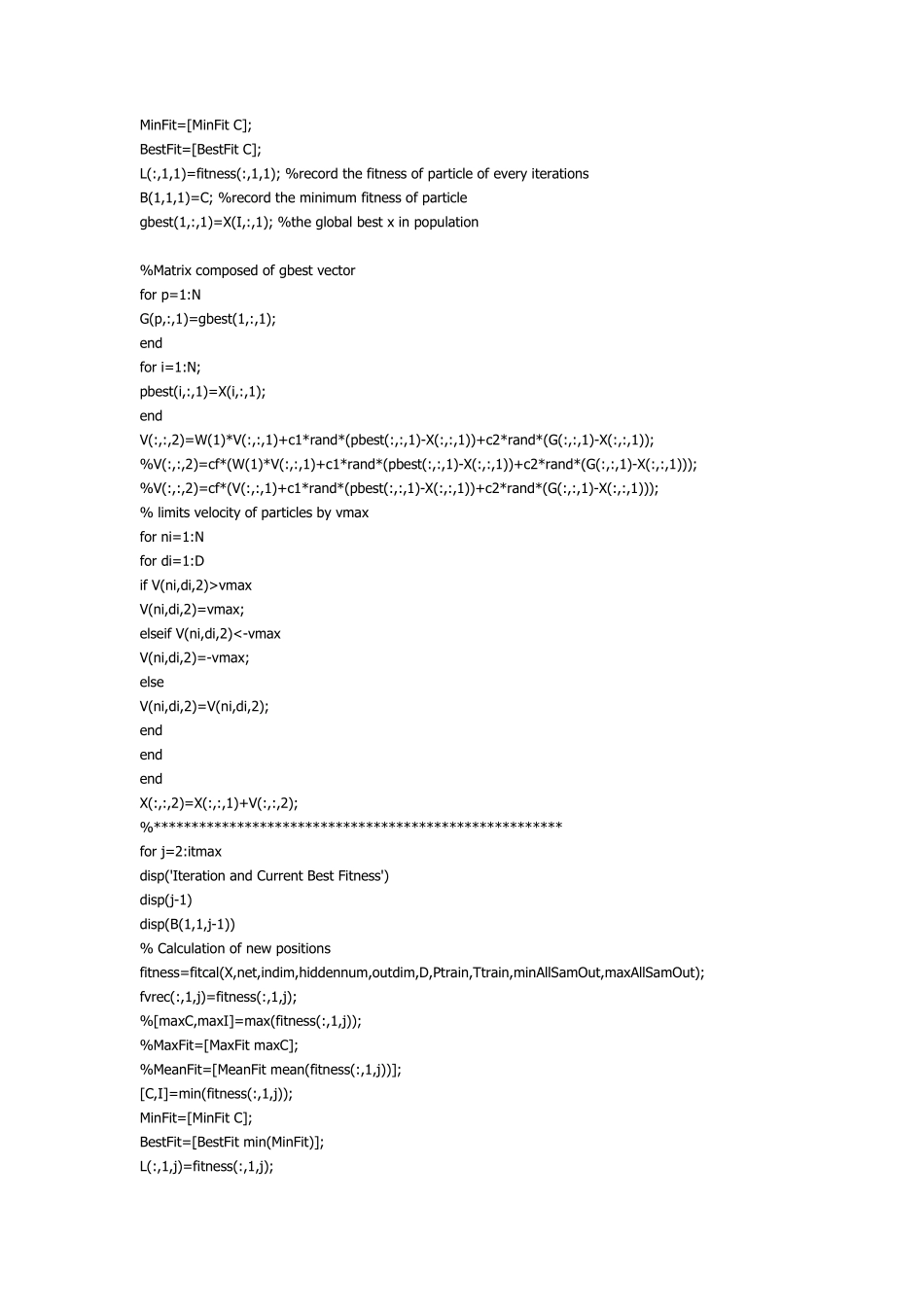

function psobp
% BP neural network trained by PSO algorithm
% Copyright by Deng Da-Peng @ 2005
% Email: rexdeng@163.com
% You can change and distribute this code freely for academic usage
% Business usage is strictly prohibited
clc
clear all
AllSamIn=...; % Add all your input data
AllSamOut=...; % Add all your output data
% Pre-process data with premnmx; you can use other functions
global minAllSamOut;
global maxAllSamOut;
[AllSamInn,minAllSamIn,maxAllSamIn,AllSamOutn,minAllSamOut,maxAllSamOut] = premnmx(AllSamIn,AllSamOut);
% Draw 10 percent of all samples as testing samples; the rest are training samples
i=[10:10:1000];
TestSamIn=[];
TestSamOut=[];
for j=1:100
    TestSamIn=[TestSamIn,AllSamInn(:,i(j))];
    TestSamOut=[TestSamOut,AllSamOutn(:,i(j))];
end
TargetOfTestSam=...; % Add real output of testing samples
TrainSamIn=AllSamInn;
TrainSamOut=AllSamOutn;
TrainSamIn(:,i)=[];
TrainSamOut(:,i)=[];
% Evaluating sample
EvaSamIn=...;
EvaSamInn=tramnmx(EvaSamIn,minAllSamIn,maxAllSamIn); % preprocessing
global Ptrain;
Ptrain = TrainSamIn;
global Ttrain;
Ttrain = TrainSamOut;
Ptest = TestSamIn;
Ttest = TestSamOut;
% Initialize BPN parameters
global indim;
indim=5;
global hiddennum;
hiddennum=3;
global outdim;
outdim=1;
% Initialize PSO parameters
vmax=0.5; % Maximum velocity
minerr=0.001; % Minimum error
wmax=0.90;
wmin=0.30;
global itmax; % Maximum iteration number
itmax=300;
c1=2;
c2=2;
for iter=1:itmax
    W(iter)=wmax-((wmax-wmin)/itmax)*iter; % inertia weight declines linearly
end
% Particles are initialized between (a,b) randomly
a=-1;
b=1;
% Between (m,n), (which can also be started from zero)
m=-1;
n=1;
global N; % number of particles
N=40;
global D; % length of particle
D=(indim+1)*hiddennum+(hiddennum+1)*outdim;
% Initialize positions of particles
rand('state',sum(10...