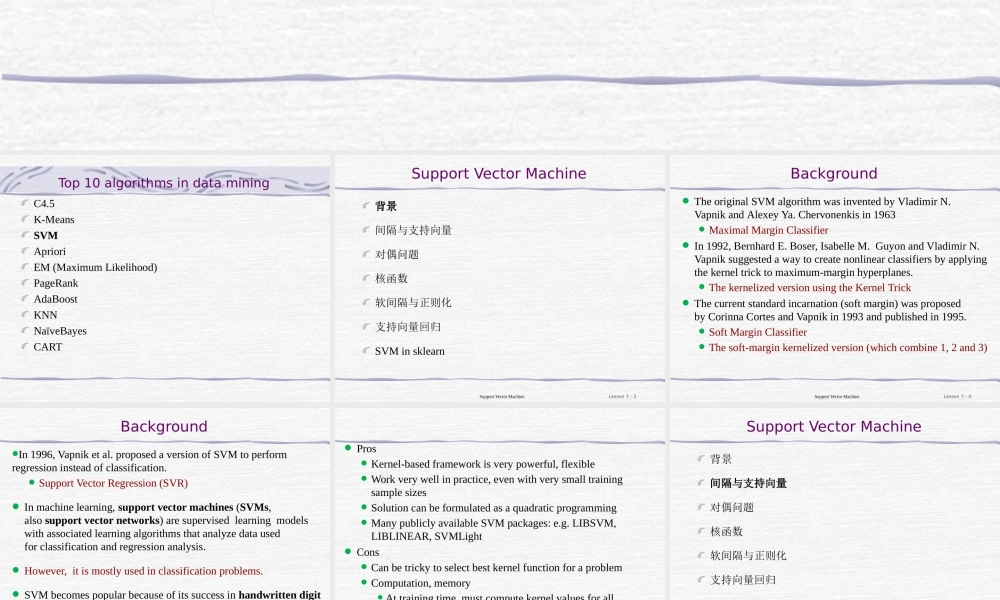

Foundations of Machine Learning
Support Vector Machine

Top 10 algorithms in data mining:
C4.5, K-Means, SVM, Apriori, EM (Maximum Likelihood), PageRank, AdaBoost, KNN, Naïve Bayes, CART

Support Vector Machine
- Background
- Margin and Support Vectors
- Dual Problem
- Kernel Functions
- Soft Margin and Regularization
- Support Vector Regression
- SVM in sklearn

Support Vector Machine, Lesson 7

Background

1. Maximal Margin Classifier: the original SVM algorithm was invented by Vladimir N. Vapnik and Alexey Ya. Chervonenkis in 1963.
2. The kernelized version using the Kernel Trick: in 1992, Bernhard E. Boser, Isabelle M. Guyon and Vladimir N. Vapnik suggested a way to create nonlinear classifiers by applying the kernel trick to maximum-margin hyperplanes.
3. Soft Margin Classifier: the current standard incarnation (soft margin) was proposed by Corinna Cortes and Vapnik in 1993 and published in 1995.

The soft-margin kernelized version combines 1, 2 and 3.

Support Vector Regression (SVR): in 1996, Vapnik et al. proposed a version of SVM that performs regression instead of classification.

In machine learning, support vector machines (SVMs, also called support vector networks) are supervised learning models with associated learning algorithms that analyze data for classification and regression analysis. In practice, however, they are mostly used for classification problems. SVMs became popular because of their success in handwritten digit recognition.

Pros
- The kernel-based framework is very powerful and flexible.
- Works very well in practice, even with very small training sample sizes.
- The solution can be formulated as a quadratic programming problem.
- Many publicly available SVM packages, e.g. LIBSVM, LIBLINEAR, SVMLight.

Cons
- Can be tricky to select the best kernel function...
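Choosing a kernel is listed above as a practical difficulty. A common way to handle it is to compare candidate kernels by cross-validation. The following is a minimal sketch of that idea, assuming scikit-learn is installed (its `SVC` class is built on LIBSVM). The dataset (`load_digits`, small 8x8 images of handwritten digits) and the grid of `kernel`, `C`, `gamma` and `degree` values are illustrative choices, not values taken from this lesson.

```python
from sklearn.datasets import load_digits
from sklearn.model_selection import GridSearchCV, train_test_split
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

# Handwritten digits: 8x8 grayscale images flattened to 64 features, 10 classes
X, y = load_digits(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

# Standardize features, then fit a soft-margin kernel SVM.
# make_pipeline names the SVC step "svc", hence the "svc__" prefixes below.
pipe = make_pipeline(StandardScaler(), SVC())

# Candidate kernels with their own hyperparameters (illustrative values).
# C is the soft-margin penalty: smaller C tolerates more margin violations.
param_grid = [
    {"svc__kernel": ["linear"], "svc__C": [0.1, 1, 10]},
    {"svc__kernel": ["rbf"], "svc__C": [1, 10], "svc__gamma": ["scale", 0.01]},
    {"svc__kernel": ["poly"], "svc__C": [1, 10], "svc__degree": [2, 3]},
]

# 5-fold cross-validation on the training set picks the kernel and its parameters
search = GridSearchCV(pipe, param_grid, cv=5)
search.fit(X_train, y_train)

best_kernel = search.best_params_["svc__kernel"]
test_accuracy = search.score(X_test, y_test)  # accuracy of the refit best model
print("best params:", search.best_params_)
print(f"cross-validated accuracy: {search.best_score_:.3f}")
print(f"held-out test accuracy: {test_accuracy:.3f}")
```

Each kernel gets its own dictionary in `param_grid` so that, for example, `gamma` is only searched for the RBF kernel and `degree` only for the polynomial kernel. The held-out test split is kept out of the search so that the final accuracy is not biased by the kernel selection itself.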