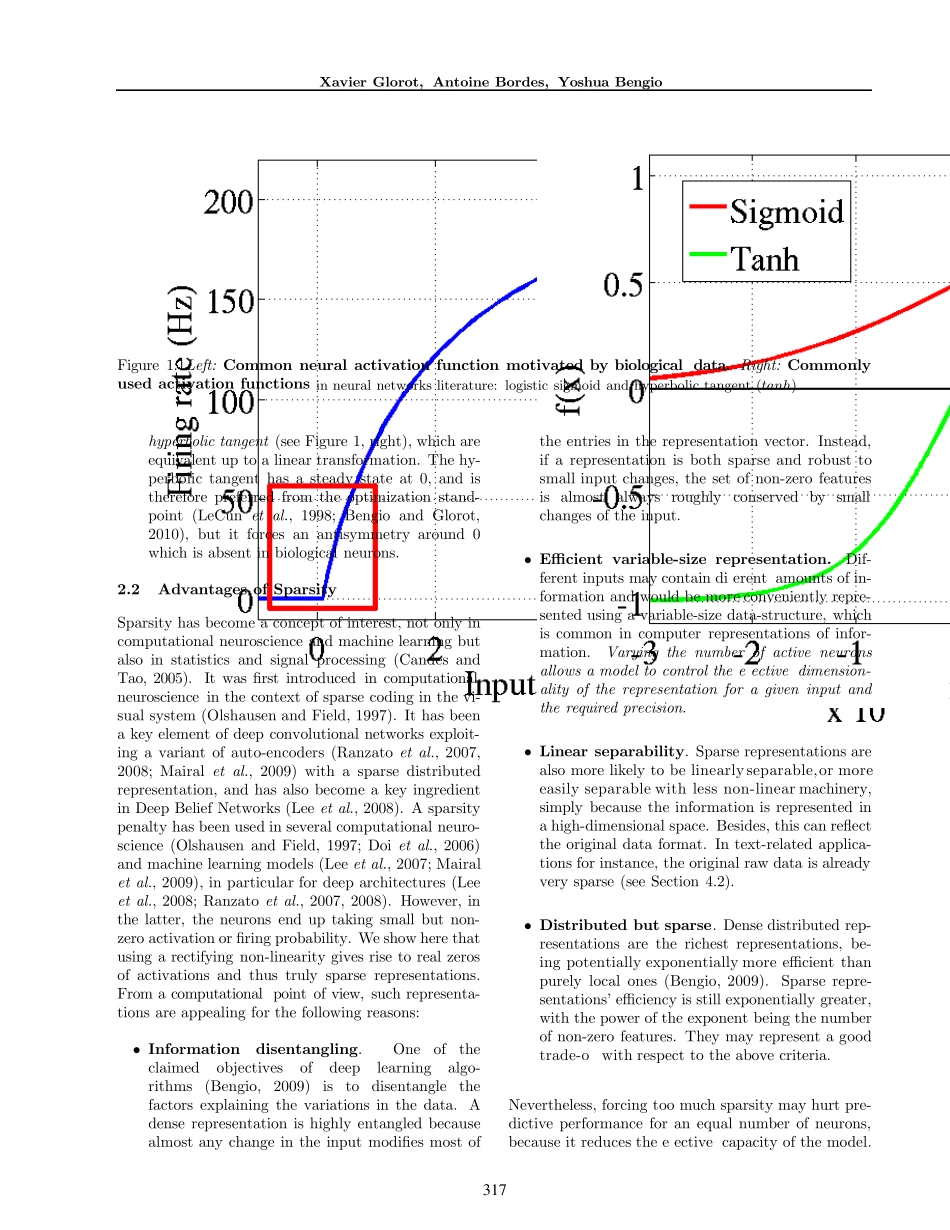

Deep Sparse Rectifier Neural Networks

Xavier Glorot, DIRO, Université de Montréal, Montréal, QC, Canada, glorotxa@iro.umontreal.ca

Antoine Bordes, Heudiasyc, UMR CNRS 6599, UTC, Compiègne, France, and DIRO, Université de Montréal, Montréal, QC, Canada, antoine.bordes@hds.utc.fr

Yoshua Bengio, DIRO, Université de Montréal, Montréal, QC, Canada, bengioy@iro.umontreal.ca

Appearing in Proceedings of the 14th International Conference on Artificial Intelligence and Statistics (AISTATS) 2011, Fort Lauderdale, FL, USA. Volume 15 of JMLR: W&CP 15. Copyright 2011 by the authors.

Abstract

While logistic sigmoid neurons are more biologically plausible than hyperbolic tangent neurons, the latter work better for training multi-layer neural networks. This paper shows that rectifying neurons are an even better model of biological neurons and yield equal or better performance than hyperbolic tangent networks in spite of the hard non-linearity and non-differentiability at zero, creating sparse representations with true zeros, which seem remarkably suitable for naturally sparse data. Even though they can take advantage of semi-supervised setups with extra-unlabeled data, deep rectifier networks can reach their best performance without requiring any unsupervised pre-training on purely supervised tasks with large labeled datasets. Hence, these results can be seen as a new milestone in the attempts at understanding the difficulty in training deep but purely supervised neural networks, and closing the performance gap between neural networks learnt with and without unsupervised pre-training.

1 Introduction

Many differences exist between the neural network models used by machine learning researchers and those used by computational neuroscientists. This is in part because the objective of the former is t...