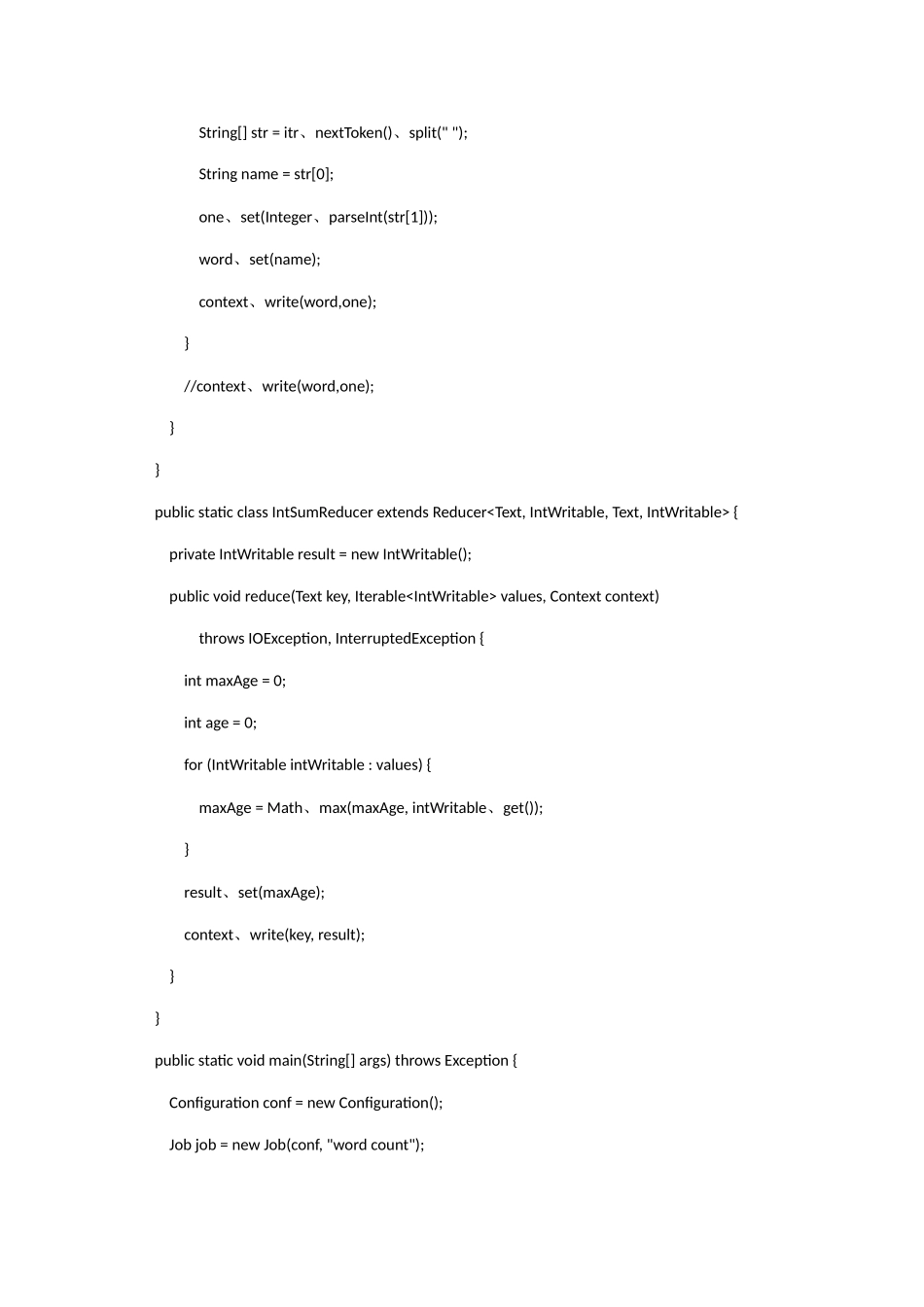

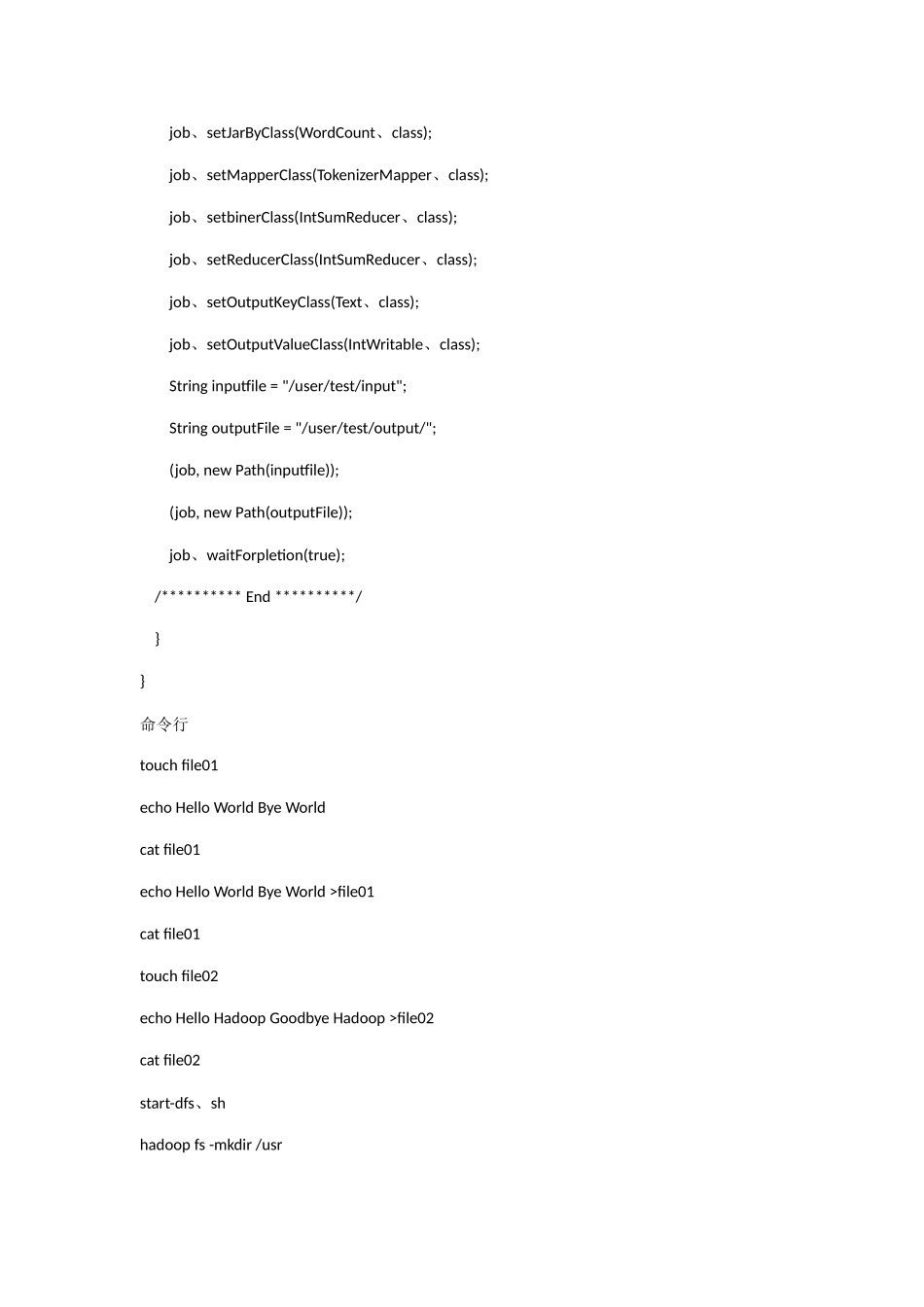

MapReduce Level 1: Score Statistics. Passing code:

    import java.io.IOException;
    import java.util.StringTokenizer;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.*;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.Mapper;
    import org.apache.hadoop.mapreduce.Reducer;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
    import org.apache.hadoop.util.GenericOptionsParser;

    public class WordCount {
        /********** Begin **********/

        // Mapper: each input line has the form "name score"; emit (name, score).
        public static class TokenizerMapper
                extends Mapper<LongWritable, Text, Text, IntWritable> {
            // Reused output objects; "one" carries the parsed score, not a count.
            private final static IntWritable one = new IntWritable(1);
            private Text word = new Text();

            @Override
            public void map(LongWritable key, Text value, Context context)
                    throws IOException, InterruptedException {
                StringTokenizer itr = new StringTokenizer(value.toString(), "\n");
                while (itr.hasMoreTokens()) {
                    String[] str = itr.nextToken().split(" ");
                    String name = str[0];
                    one.set(Integer.parseInt(str[1]));
                    word.set(name);
                    context.write(word, one);
                }
            }
        }

        // Reducer: for each name, keep only the highest score.
        public static class IntSumReducer
                extends Reducer<Text, IntWritable, Text, IntWritable> {
            private IntWritable result = new IntWritable();

            @Override
            public void reduce(Text key, Iterable<IntWritable> values, Context context)
                    throws IOException, InterruptedException {
                int maxAge = 0; // running maximum of this key's scores
                for (IntWritable intWritable : values) {
                    maxAge = Math.max(maxAge, intWritable.get());
                }
                result.set(maxAge);
                context.write(key, result);
            }
        }

        public static void main(String[] args) throws Exception {
            Configuration conf = new Configuration();
            Job job = new Job(conf, "word count");
            job...
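
The map and reduce steps above can be checked without a Hadoop cluster. The following is a minimal plain-Java sketch, not part of the exercise solution: the class name `MaxScoreSketch`, the method `maxPerName`, and the sample records are all hypothetical. It assumes each line has the form `name score` separated by a single space, as the mapper does, and keeps the highest score per name, as the reducer does.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical stand-alone sketch: imitates the map -> group -> reduce flow
// in plain Java so the per-name maximum can be checked locally.
class MaxScoreSketch {

    // Parses each "name score" line (the map step) and keeps the largest
    // score seen for each name (the reduce step).
    static Map<String, Integer> maxPerName(List<String> lines) {
        // TreeMap keeps names sorted, similar to how reducer keys arrive sorted.
        Map<String, Integer> best = new TreeMap<>();
        for (String line : lines) {
            if (line.trim().isEmpty()) {
                continue; // skip blank lines
            }
            String[] parts = line.trim().split(" ");
            String name = parts[0];
            int score = Integer.parseInt(parts[1]);
            // Keep the maximum of the stored score and the new one.
            best.merge(name, score, Integer::max);
        }
        return best;
    }

    public static void main(String[] args) {
        // Sample records are invented for illustration only.
        List<String> input = Arrays.asList("alice 82", "bob 75", "alice 91", "bob 60");
        System.out.println(maxPerName(input)); // prints {alice=91, bob=75}
    }
}
```

In the real job the grouping by name is done by the Hadoop shuffle phase rather than by a `TreeMap`, so this sketch only mirrors the logic, not the distributed execution.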