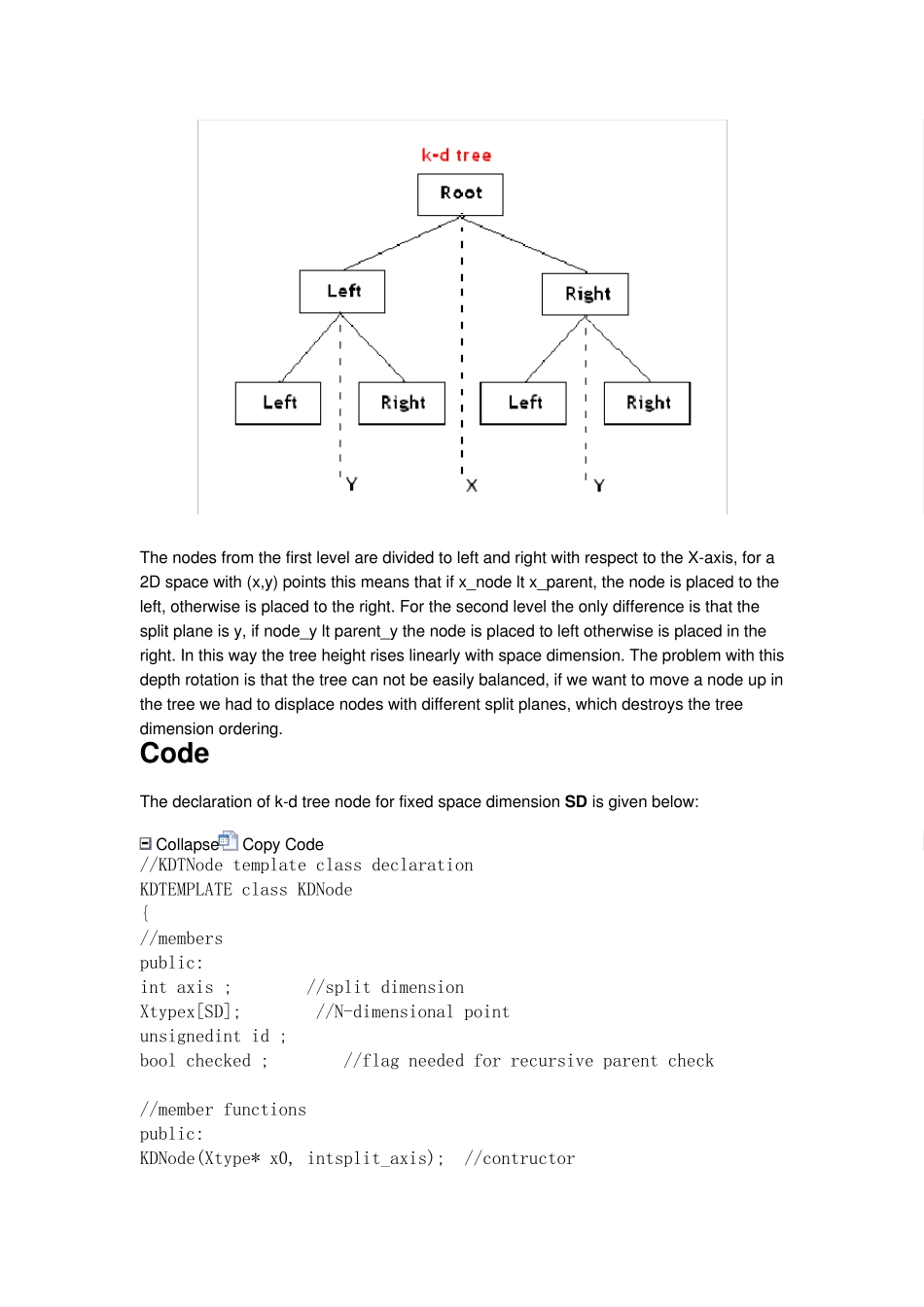

Introduction

Many real-life problems require fast analysis and fast search in multidimensional data. The most popular structure used for this is the so-called k-d tree. It has the advantage that it is easy to build and has simple algorithms for closest-point and range search.

In this article I study the performance of the k-d tree for nearest-neighbour search. The analysis shows that the k-d tree works quite well for small dimensions. However, the number of searched nodes rises exponentially with the dimension of the space, and for N>10 dimensions the k-d tree may become too slow. Another drawback is that the basic k-d tree does not allow balancing: you have to reorder the entire tree periodically to improve its balance, which is inconvenient for changing data and large datasets. There are some extensions of the k-d tree that make run-time balancing possible, but the problem with high dimensions remains for all k-d variations.

This leaves room for new ideas and algorithms. With this article I hope to start a discussion about k-d enhancements and more effective solutions to the multidimensional nearest-neighbour problem.

The sources contain a C++ template of the k-d tree and the project that I used to test the tree. If you are not familiar with binary trees, you can read my introductory article about binary trees here.

Background

First I will define the problem that the k-d tree solves. Let us have an N-dimensional point P with coordinates Px, Py, ..., Pn. The point coordinates are in a real, orthogonal, normalized Cartesian space (I suppose that the k-d tree can be built for an arbitrary space as long as a distance() function is provided). We search in a dataset for all points which are inside a ...